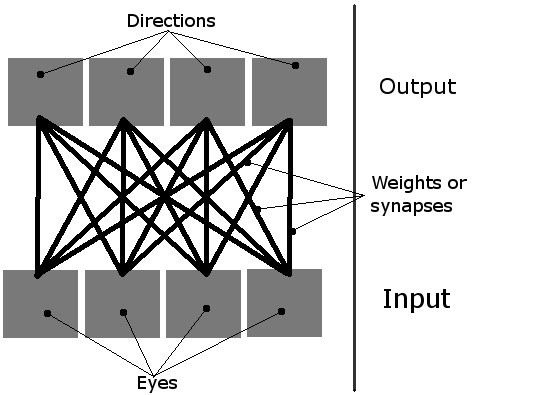

I'm making a simple learning simulation in which there are multiple organisms on screen. They are supposed to learn how to eat by using their simple neural networks. Each one has 4 output neurons, and each neuron triggers movement in one direction (the world is a 2D plane viewed from above, so there are only four directions and therefore four outputs are required). Their only inputs are four "eyes". Only one eye can be active at a time, and it basically serves as a pointer to the nearest object (either a green food block or another organism).
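To make the wiring concrete, here is a minimal sketch of one decision step, assuming a 4x4 weight matrix where `weights[i][j]` connects eye `i` to output neuron `j`, and a neuron fires when its summed input reaches the threshold. The names `DIRECTIONS`, `eye_vector` and `choose_move`, the direction order, and the rules "fire on the strongest neuron if several fire" and "stand still if none fire" are all hypothetical choices for illustration, not taken from the simulation itself.

```python
# Hypothetical direction order; output neuron j moves the organism in DIRECTIONS[j].
DIRECTIONS = ["up", "right", "down", "left"]


def eye_vector(active_eye):
    """One-hot input: only the eye pointing at the nearest object is 1."""
    return [1.0 if i == active_eye else 0.0 for i in range(4)]


def choose_move(weights, inputs, threshold=1.0):
    """Return the direction of the neuron that fires, or None if none fires.

    weights[i][j] is the connection from eye i to output neuron j.
    """
    # Summed input arriving at each output neuron.
    sums = [sum(inputs[i] * weights[i][j] for i in range(4)) for j in range(4)]
    fired = [j for j in range(4) if sums[j] >= threshold]
    if not fired:
        return None  # assumption: no neuron fired, so the organism stays put
    # Assumption: if several neurons fire, the strongest one wins.
    best = max(fired, key=lambda j: sums[j])
    return DIRECTIONS[best]


# Usage: eye 2 sees something and is strongly wired to the "up" neuron.
w = [[0.0] * 4 for _ in range(4)]
w[2][0] = 1.5
print(choose_move(w, eye_vector(2)))  # up
```

Because only one eye is active at a time, each output neuron effectively sees a single weight, so the whole "network" behaves like a lookup table from eye to direction.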

Thus, the network can be imagined like this:

And an organism looks like this (both in theory and the actual simulation, where they really are red blocks with their eyes around them):

And this is how it all looks (this is an old version in which the eyes did not work yet, but it looks similar):

Now that I have described my general idea, let me get to the heart of the problem...

Initialization: First, I create some organisms and food. Then all 16 weights in their neural networks are set to random values, like this: `weight = random.random()*threshold*2`. The threshold is a global value that describes how much input each neuron needs in order to activate ("fire"). It is usually set to 1.
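As a sketch, the initialization rule above for a 4-eye, 4-output network could look like this (the function name `init_weights` is hypothetical). Since `random.random()` returns a value in [0, 1), every weight lands in [0, 2 * threshold), with an average equal to the threshold.

```python
import random

THRESHOLD = 1.0  # global firing threshold, "usually set to 1"


def init_weights(threshold=THRESHOLD):
    """Return a 4x4 matrix: weights[eye][output] = random.random() * threshold * 2."""
    return [[random.random() * threshold * 2 for _ in range(4)] for _ in range(4)]


weights = init_weights()
```

One consequence worth noting: with a one-hot eye input, output neuron `j` fires exactly when `weights[eye][j] >= threshold`, so each connection starts with a 50% chance of being able to fire. Which eye-to-direction mappings an organism starts with is therefore pure luck.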

Learning: By default, the weights in the neural networks are lowered by 1% at each step. But if an organism actually manages to eat something, the connection between the last active input and output is strengthened.
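A minimal sketch of this learning rule, assuming "lowered by 1%" means multiplying every weight by 0.99 each step, and assuming a fixed boost (the hypothetical `REWARD` value) for the last active input/output pair when food is eaten:

```python
DECAY = 0.99   # assumption: "lowered by 1% each step" as a multiplicative decay
REWARD = 0.5   # hypothetical: how much a successful connection is strengthened


def decay(weights, factor=DECAY):
    """Shrink every weight by 1% in place."""
    for row in weights:
        for j in range(len(row)):
            row[j] *= factor


def reinforce(weights, last_eye, last_output, amount=REWARD):
    """Strengthen the connection that was active when the organism ate."""
    weights[last_eye][last_output] += amount


w = [[1.0] * 4 for _ in range(4)]
decay(w)              # every weight is now 0.99
reinforce(w, 1, 2)    # eye 1 -> output 2 is now 1.49
```

Written out like this, the problem described next is easy to see: `decay` only ever pushes weights down, and `reinforce` only runs after a meal, so an organism whose random weights never lead it to food has no rule that could ever improve them.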

But there is a big problem. I don't think this is a good approach, because they don't actually learn anything! Only the organisms whose initial weights happened to be beneficial get a chance to eat something, and then only they have their weights strengthened! What about those whose connections were set up badly? They'll just die instead of learning.

How do I avoid this? The only solution that comes to mind is to randomly increase or decrease the weights so that eventually someone will hit the right configuration and eat something by chance. But I find this solution very crude and ugly. Do you have any ideas?

Thank you for your answers! Every single one of them was very useful, although some were more relevant than others. I have decided to use the following approach:

1. Set all the weights to random numbers.
2. Decrease the weights over time.
3. (New) Occasionally increase or decrease a weight at random. The more successful the organism is, the less its weights get changed.
4. When an organism eats something, increase the weight between the corresponding input and output.
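Here is a minimal Python sketch of those four steps together, assuming the 4-eye, 4-output weight layout described in the question. Everything not stated there is a hypothetical choice: the `Organism` class, the per-weight mutation chance `MUTATION_RATE`, Gaussian noise via `random.gauss`, using the number of meals as the measure of "success", and scaling the noise as `BASE_SIGMA / (1 + food_eaten)`.

```python
import random

DECAY = 0.99          # step 2: weights shrink by 1% per step (multiplicative, assumed)
MUTATION_RATE = 0.05  # hypothetical: chance per weight per step of a random change
BASE_SIGMA = 0.2      # hypothetical: mutation size for an organism that never ate
REWARD = 0.5          # hypothetical: step 4 boost for the last active connection


class Organism:
    def __init__(self, threshold=1.0, rng=None):
        self.rng = rng or random.Random()
        self.threshold = threshold
        # Step 1: random weights in [0, 2 * threshold), weights[eye][output].
        self.weights = [[self.rng.random() * threshold * 2 for _ in range(4)]
                        for _ in range(4)]
        self.food_eaten = 0  # hypothetical measure of success
        self.last_eye = None
        self.last_output = None

    def act(self, active_eye):
        """Pick the strongest output neuron that reaches the threshold, or None."""
        # With a one-hot eye input, only one row of weights contributes.
        sums = self.weights[active_eye]
        fired = [j for j in range(4) if sums[j] >= self.threshold]
        self.last_eye = active_eye
        self.last_output = max(fired, key=lambda j: sums[j]) if fired else None
        return self.last_output

    def mutation_sigma(self):
        """Step 3: the more an organism has eaten, the smaller its random changes."""
        return BASE_SIGMA / (1 + self.food_eaten)

    def step_learning(self):
        """Steps 2 and 3, applied once per simulation step."""
        sigma = self.mutation_sigma()
        for row in self.weights:
            for j in range(4):
                row[j] *= DECAY
                if self.rng.random() < MUTATION_RATE:
                    row[j] += self.rng.gauss(0.0, sigma)

    def on_eat(self):
        """Step 4: strengthen the connection that led to the meal."""
        self.food_eaten += 1
        if self.last_eye is not None and self.last_output is not None:
            self.weights[self.last_eye][self.last_output] += REWARD
```

The random changes give badly initialized organisms a way out, since a weight can drift above the threshold and produce a lucky meal. Shrinking the noise for organisms that already eat well keeps them from losing a configuration that works.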