I had the same problem. It turned out that because I was submitting my application in client mode, the machine I ran the spark-submit command from was running the driver program, so it needed access to the module files.

I fixed it by adding my module's directory to the PYTHONPATH environment variable on the node I submit the job from. Add the following line to your .bashrc file (or run it in the shell before submitting the job):

    export PYTHONPATH=$PYTHONPATH:/home/welshamy/modules

That solved the problem. Since the module is already on the driver node's path, you don't have to zip and ship it with --py-files or call sc.addPyFile().
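If you want to confirm that a PYTHONPATH entry really makes the module importable before you submit, something like the following rough sketch may help. It starts a fresh Python interpreter with the directory appended to PYTHONPATH and tries the import. The helper name `importable_with_pythonpath` and the module name `mymodule` are my own placeholders, not anything from Spark.

```python
import os
import subprocess
import sys


def importable_with_pythonpath(module_name, module_dir):
    """Try `import module_name` in a fresh interpreter with module_dir on PYTHONPATH.

    Hypothetical helper for illustration; it only tests the local machine,
    which is the driver when you submit in client mode.
    """
    env = dict(os.environ)
    existing = env.get("PYTHONPATH", "")
    # Same effect as: export PYTHONPATH=$PYTHONPATH:<module_dir>
    env["PYTHONPATH"] = existing + os.pathsep + module_dir if existing else module_dir
    result = subprocess.run(
        [sys.executable, "-c", f"import {module_name}"],
        env=env,
        capture_output=True,
    )
    # Exit code 0 means the import succeeded in the child interpreter.
    return result.returncode == 0


# Example (assumed names):
# importable_with_pythonpath("mymodule", "/home/welshamy/modules")
```

This only tells you about the machine you run it on, so it says nothing about whether the workers can see the module.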

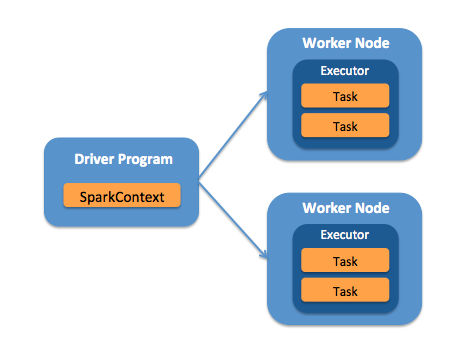

The key to solving any PySpark module import error is understanding whether the driver, the workers, or both need the module files.
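One way to find out which side is missing the module is to ask each side directly. Here is a minimal sketch, assuming your module is called `mymodule` (a placeholder) and that you already have a live SparkContext named `sc` for the worker check. The `can_import` helper is mine, not part of PySpark.

```python
import importlib.util


def can_import(module_name):
    """Return True if the current Python process can locate module_name."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        # Raised for dotted names whose parent package is missing,
        # or for modules with a broken __spec__.
        return False


# On the driver (run in the same session that creates the SparkContext):
#   print(can_import("mymodule"))
#
# On the workers (assumes an existing SparkContext `sc`; each task reports
# whether its executor's Python process can find the module):
#   print(sc.parallelize(range(4), 4).map(lambda _: can_import("mymodule")).collect())
```

If the driver check fails, fixing PYTHONPATH on the submitting machine is probably enough in client mode. If the worker check returns any False values, the module has to reach the executors too, for example with --py-files or sc.addPyFile().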