
This certification program is offered in collaboration with E&ICT, IIT Guwahati, and provides extensive training in Big Data Analytics technologies and concepts such as Hadoop, Spark, Python, MongoDB, and Data Warehousing. The program aims to give learners a complete experience: understanding the concepts, mastering them thoroughly, and applying them in real-life scenarios.

Learning Format: Online

Live Classes: 9 Months

Career Services: by Intellipaat

Certification: E&ICT IIT Guwahati

EMI: Starts at ₹8,000/month*

This certification program in Big Data Analytics combines academic rigor with industry exposure. The course was designed and created under the mentorship of top faculty members of IIT Guwahati.

Partnering with E&ICT, IIT Guwahati

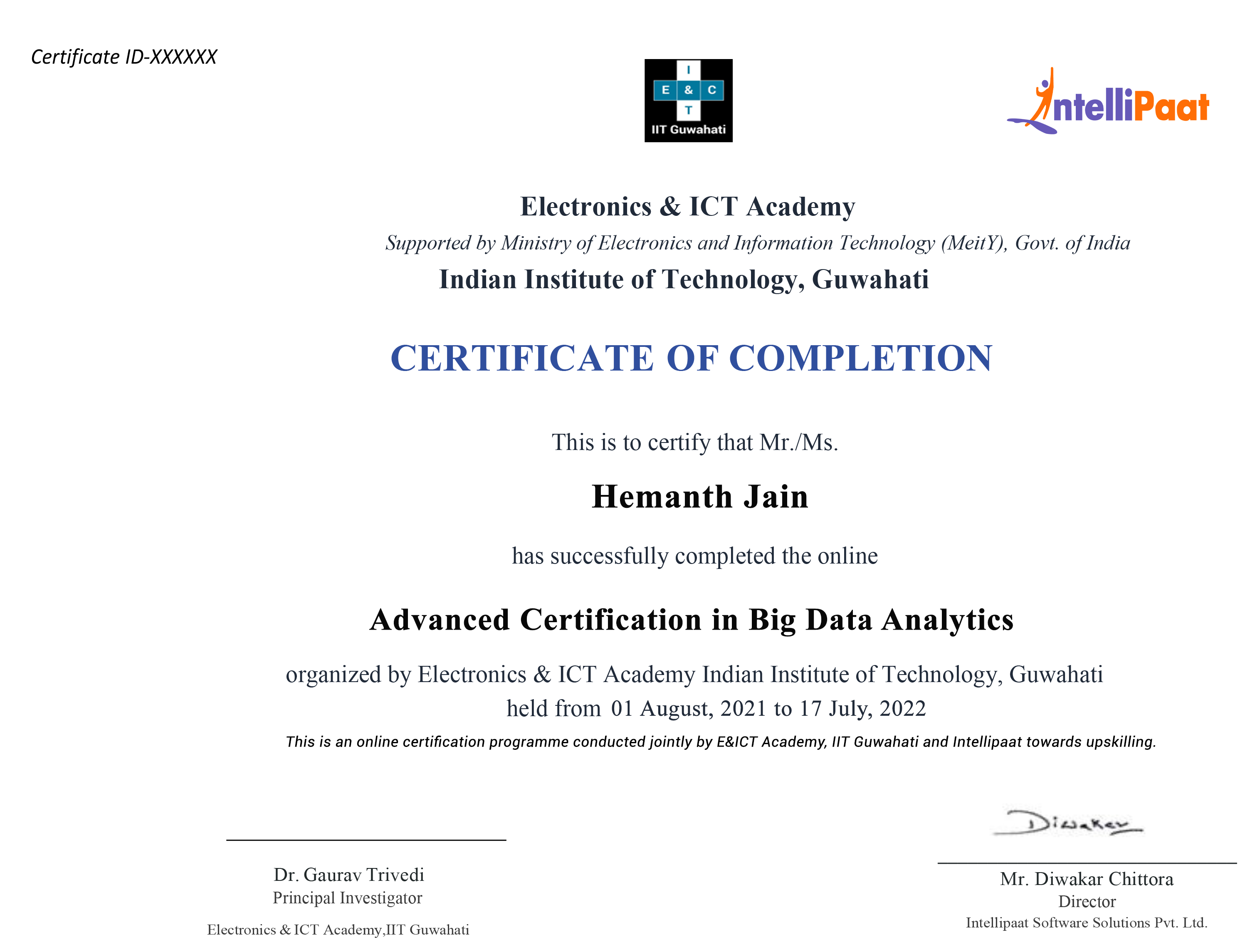

This Certification Program in Big Data Analytics is offered in partnership with E&ICT Academy, IIT Guwahati. E&ICT Academy, IIT Guwahati is an initiative of the Ministry of Electronics and Information Technology (MeitY), Government of India, run in collaboration with IIT Guwahati professors to provide high-quality education programs.

Upon completion of this program, you will be able to:

Build and manage a personalized, pluggable, service-based framework for importing, cleansing, transforming, and validating data.

Transform raw data into meaningful insights and present them in a form that business users can act on.

Identify problems, understand data sets, collect and clean large data sets, create data models, and perform data mining.

Develop data pipelines and design the solutions needed to resolve complex issues.

Design, create, and test scalable and robust data platform components that solve a variety of problems.

Extract the data required for tasks such as business analysis, and build reports, metrics, and dashboards for performance monitoring.

Skills to Master

Big Data

Hadoop

Spark

Statistics

Data Science

Machine Learning

SQL

Python

Scala

Real-time Streaming

Data Mining

Business Intelligence

AWS Big Data

Tools to Master

Python


Power BI Basics

DAX

Data Visualization with Analytics

Hands-on Exercise: Create a dashboard that presents actionable insights from sales data.

The projects will be a part of your certification in big data analytics to consolidate your learning. Industry-based projects will ensure that you gain real-world experience before starting your career in big data.

Practice 100+ Essential Tools

Designed by Industry Experts

Get Real-world Experience

Admission Details

The application process consists of three simple steps. Selected candidates receive an offer of admission based on feedback from the interview panel. They are notified by email and phone and can reserve their seats by paying the admission fee.

Submit Application

Tell us a bit about yourself and why you want to join this program

Application Review

An admission panel will shortlist candidates based on their application

Admission

Selected candidates will be notified within 1–2 weeks

This program is conducted online over 9 months through live, instructor-led training sessions.

Intellipaat provides career services, including guaranteed interviews, to all learners enrolled in this course. E&ICT IIT Guwahati is not responsible for the career services.

After you share your basic details with us, our course advisor will speak to you and based on the discussion, your application will be screened. If your application is shortlisted, you will need to fill in a detailed application form and attend a telephonic interview, which will be conducted by a subject matter expert. Based on your profile and interview, if you are selected, you will receive an admission offer letter.

This program must be completed over nine months by attending live classes and finishing the assigned tasks.

If you miss a live class for any reason, you will receive the recording of that class within 12 hours. If you need support, you can also contact our 24/7 technical support team to resolve any query.

To complete this program, you will need to set aside around six hours a week for learning. Classes are held on weekends (Saturday and Sunday), and each session lasts three hours.

To ensure that you make the most of this program, you will be given industry-grade projects to work on. This is done to make sure that you get a concrete understanding of what you’ve learned.

After completing this program, you will first prepare for job interviews through mock interview sessions and then receive help preparing a resume that meets industry standards. This is followed by a minimum of three exclusive interviews arranged through a network of 400+ hiring partners across the globe.

Upon completing all program requirements, you will be awarded a certificate from E&ICT Academy, IIT Guwahati.

There will be an optional two-day campus immersion module at E&ICT Academy, IIT Guwahati, during which learners will visit the campus, learn from the faculty, and interact with their peers. The module is subject to the COVID-19 situation and the guidelines issued by the Institute, and learners bear the cost of travel and accommodation.

To be eligible for the placement pool, learners must complete the course and submit all projects and assignments. They must then clear the Placement Readiness Test (PRT) to enter the placement pool and gain access to our job portal and career mentoring sessions.

Please note that the course fee is non-refundable. We will support you at every step of your upskilling and professional growth.

If, for any reason, you want to defer your batch or restart the classes in a new batch, send a batch deferral request to [email protected]. One batch deferral request is allowed at no additional cost.

Learners can request a deferral to any cohort starting within the next 3–6 months from the start date of the batch in which they were originally enrolled. The free deferral is granted only once, and only if you have completed no more than 20% of the program. A second deferral requires a batch deferral fee equal to 10% of the total course fee paid for the program, plus taxes.

Yes, Intellipaat certification is highly recognized in the industry. Our alumni work at more than 10,000 corporations and startups, which shows that our programs are industry-aligned and well recognized. Additionally, the Intellipaat program is offered in partnership with the National Skill Development Corporation (NSDC), which further validates its credibility. Learners receive an NSDC certificate along with the Intellipaat certificate for the programs they enroll in.

What is included in this course?
