Course Preview

This online Data Science course, in collaboration with iHUB, IIT Roorkee & Microsoft, will help you elevate your Data Science career. In this course you will master skills like Python, SQL, Statistics, Machine Learning, AI, Power BI & Generative AI, along with real-time industry-oriented projects.

In this advanced Data Science Certification program, you will take 10+ Data Science courses along with multiple case studies and industry-oriented project work.

Online Instructor-led Interactive Sessions:

Additionally, Data Science Capstone Projects and multiple electives are provided to deepen your knowledge of the Data Science & AI domain.

In this data science course, you will master the key skills to become a successful data scientist, such as:

Linux: Learn basic Linux commands to gain expertise with the Linux command line. Most of the production systems in the world run on Linux.

Python for Data Science: Master practical skills in Python programming and its data science libraries such as Pandas, NumPy, and Matplotlib. Get hands-on with ETL (Extract, Transform, Load) in this data science course.

Statistics: To become a successful data scientist, you need a solid grounding in statistics and related mathematics such as linear algebra.

Machine Learning: Master supervised and unsupervised algorithms with this program and solve business problems using algorithms.

Artificial Intelligence: Understand how neural networks work and how they are implemented in business. Work on projects & create your own AI applications.

Power BI: Master Microsoft's most widely used visualization tool. Learn how to create complex interactive dashboards and management reports.

MLOps: Once you have mastered the Machine Learning algorithms, it is time to deploy the machine learning models you have created on a cloud platform such as AWS or Azure.

MS Excel: Learn Excel analytics from scratch to master data transformation, data manipulation, data modelling and reporting.

Generative AI: Master prompt engineering and tools like ChatGPT to build data science applications at lightning speed.

Data Scientists are in high demand and are offered high-paying jobs. The field offers diverse career opportunities, along with scope for innovation and for solving complex business problems. We live in an AI era where Data Scientists enjoy global job prospects and the ability to influence key decisions in the organization.

Some of the core responsibilities of data scientists are:

Our data science certification course will help you to master data scientist skills in just 7 months.

Talk To Us

We are happy to help you 24/7

55% Average Salary Hike

45 LPA The Highest Salary

12000+ Career Transitions

500+ Hiring Partners

Career Transition Handbook

*Past record is no guarantee of future job prospects

Design and implement scalable applications using AI & ML algorithms.

Develop applications that fix data quality issues and surface data insights.

Build LLMs to power innovative applications for the future.

Design, build, and maintain the infrastructure to handle large volumes of data and large-scale applications.

Work on data analysis, model building, and data cleansing. Uncover data insights & solve business problems.

Develop and deploy machine learning solutions to solve real world problems.

Python Programming

SQL

Storytelling

Inferential Statistics

Machine Learning

Mathematical Modelling

Descriptive Statistics

Data Analysis

Generative AI

Prompt Engineering

ChatGPT

Artificial Intelligence

Large Language Models

Supervised Learning

Unsupervised Learning

MLOps

Data Visualization

Conversational AI

Ensemble Learning

Exploratory Data Analysis

Data Science

Data Mining

Statistical Learning

Research Methods

Hypothesis Testing

Statistical Analysis

EMI Starts at

₹5,000

We have partnered with financing companies to provide competitive financing options at a 0% interest rate.

Financing Partners

Contact Us

Python

Linux

Supervised Learning – Classification and Regression Implementation

Unsupervised Learning – K-means clustering and Dimensionality reduction

Data Science Projects

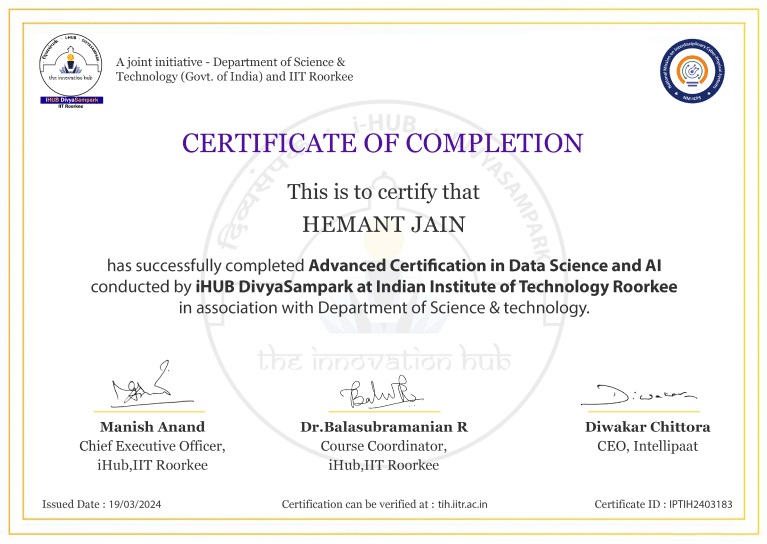

Master Data Science Skills & Earn Your Data Scientist Certificate

Experience Campus Immersion at iHUB IIT Roorkee & Build Formidable Networks With Peers & IIT Faculty

Land Your Dream Job Like Our Alumni

In India, Data Scientist salaries have been rising, with entry-level positions paying ₹9,99,593 per year and experienced professionals earning up to ₹20,00,000 annually. In the USA, the average annual salary is approximately $101,264, with entry-level roles starting around $117,276 and experienced professionals earning up to $190,000.

This Data Science course runs for 7 months, and we expect you to put in 8 hours per week to attend the live sessions and complete assignments, case studies, and project work.

You will get the below-mentioned learning in our data science course.

Data scientists are responsible for collecting, processing, and analyzing data from different data sources. They create predictive models and interpret the data to gain meaningful insights. The data insights are communicated to business teams to make informed business decisions.
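As a rough illustration of that workflow (collect, process, model, interpret), here is a minimal Python sketch. It is not taken from the course material: the toy records, the `fit_line` helper, and the `predict` function are hypothetical names and values chosen only for this example, and the model is a plain least-squares line fitted by hand.

```python
from statistics import mean

# Collect: hypothetical toy records of (ad_spend, sales); None marks a missing value.
raw = [(1.0, 3.0), (2.0, 5.0), (None, 4.0), (3.0, 7.0), (4.0, 9.0)]

# Process: drop records that contain a missing value.
clean = [(x, y) for x, y in raw if x is not None and y is not None]


def fit_line(points):
    """Fit y = slope * x + intercept by ordinary least squares."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in points)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Model: fit a simple predictive model on the cleaned data.
slope, intercept = fit_line(clean)


def predict(x):
    """Predict sales for a given ad spend using the fitted line."""
    return slope * x + intercept


# Interpret: the slope is the estimated change in sales per unit of ad spend.
print(f"slope={slope:.2f}, intercept={intercept:.2f}, predict(5)={predict(5.0):.2f}")
```

In practice this kind of work is usually done with libraries such as Pandas and scikit-learn rather than hand-written formulas; the sketch uses only the standard library so that it runs anywhere.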

Python is the most popular and preferred language for building Data Science applications. It is an easy-to-use, easy-to-learn, open-source programming language. Apart from Python, data scientists also use R and SQL. Our Data Science course covers all of these languages.

Here are the steps for getting into the placement pool:

Upon clearing the PRT, the learner will get access to dedicated jobs from Intellipaat as well as career mentoring sessions.

Yes, this data science training course will help you land data science jobs once you complete the online course.

To become a data scientist, you need good knowledge of mathematics and Python, along with familiarity with data visualization tools like Power BI. Knowledge of Machine Learning and AI algorithms, backed by hands-on exposure, is vital. Our online data science course will help you attain these skills.

The decision between a Data Science course and a data analytics course depends on your goals. Data Science is broader and focuses on gaining insights, creating models, and solving complex problems using various techniques. It is best suited to those who are interested in research and innovation and have a decent grasp of coding.

Data analytics, on the other hand, is more about interpreting existing data to make data-driven decisions. If you enjoy working with data and contributing to business strategies, data analytics may be a better fit.

We provide 24/7 support to our learners to resolve their Data Science doubts. You can get support through our dedicated chat or raise a ticket directly from the LMS. You can also book a 1:1 doubt-clearing session with our Teaching Assistant (TA) team.

No, our job assistance is aimed at helping you land your dream job. It offers a potential opportunity for you to explore various competitive openings in the corporate world and find a well-paid job, matching your profile. The final hiring decision will always be based on your performance in the interview and the requirements of the recruiter.

Intellipaat provides a variety of options for studying Data Science, including certification courses and a Master’s degree program. You can choose a program according to your career goals. All the courses are taught by top faculty and industry experts. For more details, you can visit these similar Data Science courses:

Artificial Intelligence Course

Machine Learning Certification Course

Yes, as per our refund policy, once the enrolment is done, no refund is applicable.

All candidates applying for this course are eligible for equity-based seed funding and incubation support from iHUB DivyaSampark, IIT Roorkee, for their startup ideas. Enrolled students will have the opportunity to pitch their ideas to the iHUB DivyaSampark team, and shortlisted proposals may receive funding of up to ₹50 lakh, along with full incubation support.

Additionally, candidates who are currently enrolled in a degree program and have their startup idea approved may also receive a monthly fellowship/scholarship of ₹8,000 during the early phase of their project to encourage and support innovation.