
This Advanced Certification in Data Science and Data Engineering course is designed to help you master the skills you need to land your dream job in this field. This online program covers essential topics such as Data Analysis using Python, Data Wrangling, Machine Learning, Azure Data Engineering, Deep Learning, and NLP.

Learning Format

Online Bootcamp

Live Classes

9 months

E&ICT IIT Guwahati

Certification

3 Guaranteed Interviews

by Intellipaat

EMI Starts

at ₹8,000/month*

The program offers complete advanced certification training for those who wish to pursue a career in Data Science and Data Engineering. The curriculum is led by leading faculty of IIT Guwahati and is designed for aspiring Data Scientists and Data Engineers who want to work at top organizations.

About E&ICT, IIT Guwahati

This advanced certification program is offered in partnership with E&ICT Academy, IIT Guwahati. E&ICT, IIT Guwahati is an initiative of MeitY (Ministry of Electronics and Information Technology, Govt. of India) and was formed with a team of IIT Guwahati professors to provide high-quality education programs.

Achievements of IIT Guwahati

Upon the completion of this program, you will receive:

55% Average Salary Hike

$1,20,000 Highest Salary

12000+ Career Transitions

400+ Hiring Partners

*Past record is no guarantee of future job prospects

Understand the issues and create models based on the data gathered, and also manage a team of Data Scientists.

Create and manage pluggable service-based frameworks that are customized in order to import, cleanse, transform, and validate data.

Responsible for conceptualizing, developing, testing, and implementing various advanced Statistical models on business data.

Responsible for assessing the feasibility of migrating customer solutions and/or integrating them with third-party systems on both Microsoft and non-Microsoft platforms.

Extract data from the respective sources to perform business analysis, and generate reports, dashboards, and metrics to monitor the company’s performance.

Responsible for gathering and translating business requirements into technical specifications and designing and developing data pipelines.

Skills to Master

Python

Linux

Data Science

SQL

Data Analytics

Machine Learning

Data Wrangling

NLP

Azure Data Engineering

Deep Learning

Data Visualization

Tools to master

1. Python

2. Linux

1. Extract Transform Load

2. Data Handling with NumPy

3. Data Manipulation Using Pandas

4. Data Preprocessing

5. Scientific Computing with Scipy

Hands-on Exercise:

6. Data Visualization

1. SQL Basics

2. Advanced SQL

3. Deep Dive into User Defined Functions

4. SQL Optimization and Performance

1. Basic Mathematics – Linear Algebra, MultiVariate Calculus

2. Descriptive Statistics

3. Probability

4. Inferential Statistics

1. Introduction to Machine learning

2. Supervised Learning

3. Unsupervised Learning

4. Performance Metrics

1 – Non-Relational Data Stores and Azure Data Lake Storage

1.1 Document data stores

1.2 Columnar data stores

1.3 Key/value data stores

1.4 Graph data stores

1.5 Time series data stores

1.6 Object data stores

1.7 External index

1.8 Why NoSQL or Non-Relational DB?

1.9 When to Choose NoSQL or Non-Relational DB?

1.10 Azure Data Lake Storage

Definition, Azure Data Lake-Key Components, How it stores data? Azure Data Lake Storage Gen2, Why Data Lake? Data Lake Architecture

2 – Data Lake and Azure Cosmos DB

2.1 Data Lake Key Concepts

2.2 Azure Cosmos DB

2.3 Why Azure Cosmos DB?

2.4 Azure Blob Storage

2.5 Why Azure Blob Storage?

2.6 Data Partitioning: Horizontal partitioning, Vertical partitioning, Functional partitioning

2.7 Why Partitioning Data?

2.8 Consistency Levels in Azure Cosmos DB: Semantics of the five consistency levels

3 – Relational Data Stores

3.1 Introduction to Relational Data Stores

3.2 Azure SQL Database – Deployment Models, Service Tiers

3.3 Why SQL Database Elastic Pool?

4 – Why Azure SQL?

4.1 Azure SQL Security Capabilities

4.2 High-Availability and Azure SQL Database: Standard Availability Model, Premium Availability Model

4.3 Azure Database for MySQL

4.4 Azure Database for PostgreSQL

4.5 Azure Database for MariaDB

4.6 What is PolyBase and Why PolyBase?

4.7 What is Azure Synapse Analytics (formerly SQL DW): SQL Analytics and SQL pool in Azure Synapse, Key component of a big data solution, SQL Analytics MPP architecture components

5 – Azure Batch

5.1 What is Azure Batch?

5.2 Intrinsically Parallel Workloads

5.3 Tightly Coupled Workloads

5.4 Additional Batch Capabilities

5.5 Working of Azure Batch

6 – Azure Data Factory

6.1 Flow Process of Data Factory

6.2 Why Azure Data Factory

6.3 Integration Runtime in Azure Data Factory

6.4 Mapping Data Flows

7 – Azure Databricks

7.1 What is Azure Databricks?

7.2 Azure Spark-based Analytics Platform

7.3 Apache Spark in Azure Databricks

8 – Azure Stream Analytics

8.1 Working of Stream Analytics

8.2 Key capabilities and benefits

8.3 Stream Analytics Windowing Functions: Tumbling window, Hopping Window, Sliding Window, Session Window
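As a rough illustration of the first two windowing functions listed in 8.3, the sketch below groups timestamped events into tumbling (fixed-size, non-overlapping) and hopping (fixed-size, overlapping) windows in plain Python. It is not Azure Stream Analytics query syntax: the function names, the sample events, and the half-open `[start, end)` window boundaries are all assumptions made for this example.

```python
from collections import defaultdict

def tumbling_windows(events, size):
    """Assign each (timestamp, value) event to exactly one window of `size` seconds."""
    windows = defaultdict(list)
    for ts, value in events:
        start = (ts // size) * size  # window that contains this timestamp
        windows[(start, start + size)].append(value)
    return dict(windows)

def hopping_windows(events, size, hop):
    """Assign each event to every `size`-second window whose start is a
    non-negative multiple of `hop` and which contains the event."""
    windows = defaultdict(list)
    for ts, value in events:
        start = (ts // hop) * hop  # latest window start at or before ts
        # Walk back through earlier window starts that still cover ts.
        while start > ts - size and start >= 0:
            windows[(start, start + size)].append(value)
            start -= hop
    return dict(windows)

# Hypothetical events: (timestamp in seconds, payload)
events = [(1, "a"), (4, "b"), (6, "c"), (11, "d")]

tumbling = tumbling_windows(events, size=5)
hopping = hopping_windows(events, size=10, hop=5)

print(tumbling)
print(hopping)
```

With a tumbling window every event lands in exactly one bucket, while a hopping window whose hop is smaller than its size places the same event in several overlapping buckets. Sliding and session windows depend on event arrival rather than a fixed grid and are not covered by this sketch.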

1. Artificial Intelligence Basics

2. Neural Networks

3. Deep Learning

1. Text Mining, Cleaning, and Pre-processing

2. Text classification, NLTK, sentiment analysis, etc.

3. Sentence Structure, Sequence Tagging, Sequence Tasks, and Language Modeling

4. AI Chatbots and Recommendations Engine

1. Introduction to MLOps

2. Deploying Machine Learning Models

1. Power BI Basics

2. DAX

3. Data Visualization with Analytics

1. Recommendation Engine – This case study will guide you through various machine learning processes and techniques to build a recommendation engine that can be used for movie recommendations, restaurant recommendations, book recommendations, etc.

2. Rating Predictions – This text classification and sentiment analysis case study will guide you through working with text data and building efficient machine learning models that can predict ratings, sentiments, etc.

3. Census – Using predictive modeling techniques on the census data, you will be able to create actionable insights for a given population and create machine learning models that will predict or classify various features like total population, user income, etc.

4. Housing – This real estate case study will walk you through a real-world problem in which you combine multiple features to create a predictive model for housing prices.

5. Object Detection – A more advanced yet approachable case study that will guide you toward building a machine learning model that can detect objects in real time.

6. Stock Market Analysis – Using historical stock market data, you will learn how feature engineering and feature selection can provide helpful and actionable insights for specific stocks.

7. Banking Problem – A classification problem that predicts consumer behavior based on various features using machine learning models.

8. AI Chatbot – Using the NLTK Python library, you will be able to apply machine learning algorithms and create an AI chatbot.

All the projects included in this program are aligned with industry demands and standards. These industry-oriented projects will test your level of knowledge in the Data Science and Data Engineering domain and also help you get exposure to real-life scenarios.

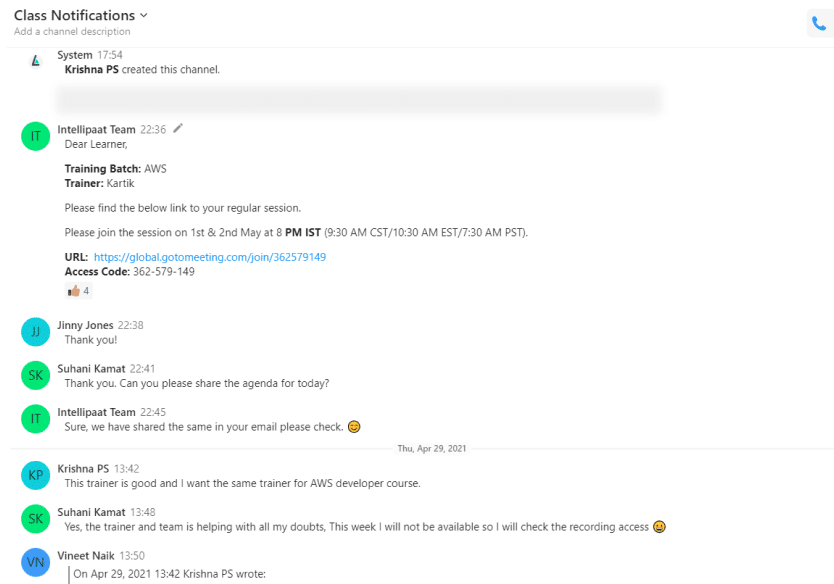

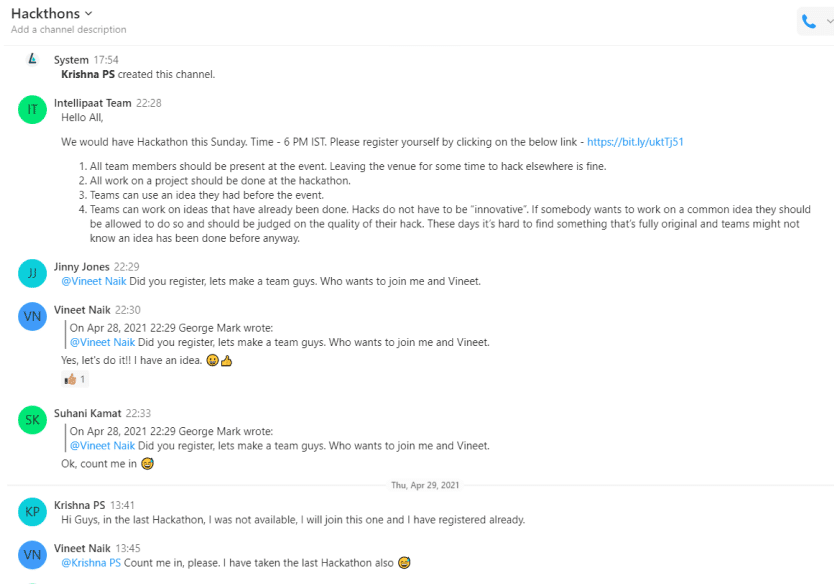

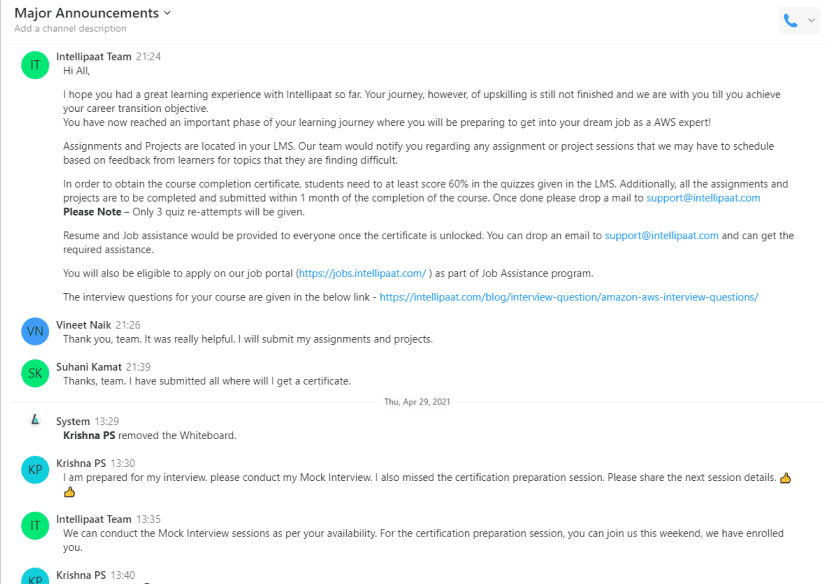

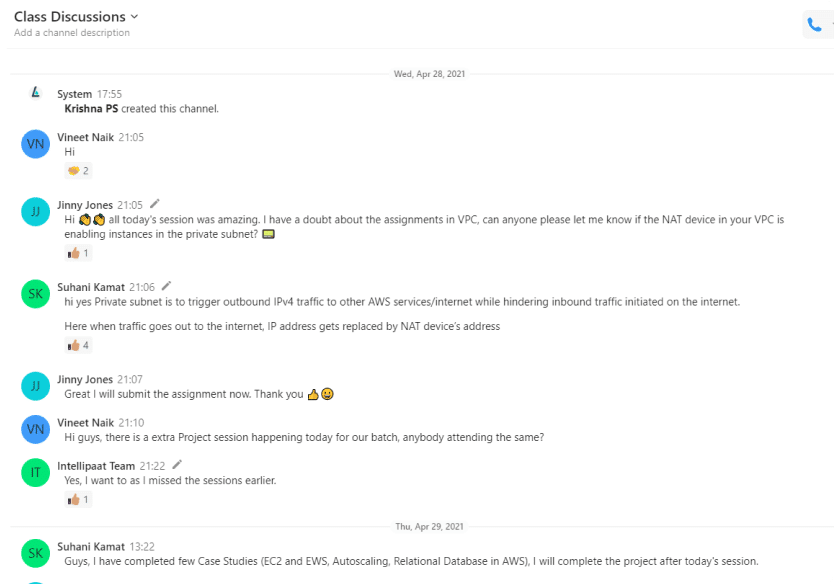

Via Intellipaat PeerChat, you can interact with your peers across all classes and batches and even our alumni. Collaborate on projects, share job referrals & interview experiences, compete with the best, make new friends – the possibilities are endless and our community has something for everyone!

Admission Details

The application process consists of three simple steps. An offer of admission will be made to selected candidates based on the feedback from the interview panel. The selected candidates will be notified over email and phone, and they can block their seats through the payment of the admission fee.

Submit Application

Tell us a bit about yourself and why you want to join this program

Application Review

An admission panel will shortlist candidates based on their application

Admission

Selected candidates will be notified within 1–2 weeks

Total Admission Fee

EMI Starts at

We have partnered with financing companies to provide very competitive finance options at a 0% interest rate.

Financing Partners


Admissions close once the required number of students is enrolled for the upcoming cohort. Apply early to secure your seat.

Next Cohorts

| Session | Date | Time | Batch Type |
|---|---|---|---|
| Program Induction | 26th Nov 2022 | 08:00 PM IST | Weekend (Sat-Sun) |
| Regular Classes | 26th Nov 2022 | 08:00 PM IST | Weekend (Sat-Sun) |

The Advanced Certification in Data Science and Data Engineering course is conducted by leading experts from E&ICT, IIT Guwahati and Intellipaat, who will make you proficient in these fields through online video lectures and projects. They will help you gain in-depth knowledge of Data Science and Data Engineering and provide hands-on experience in these domains through real-time projects.

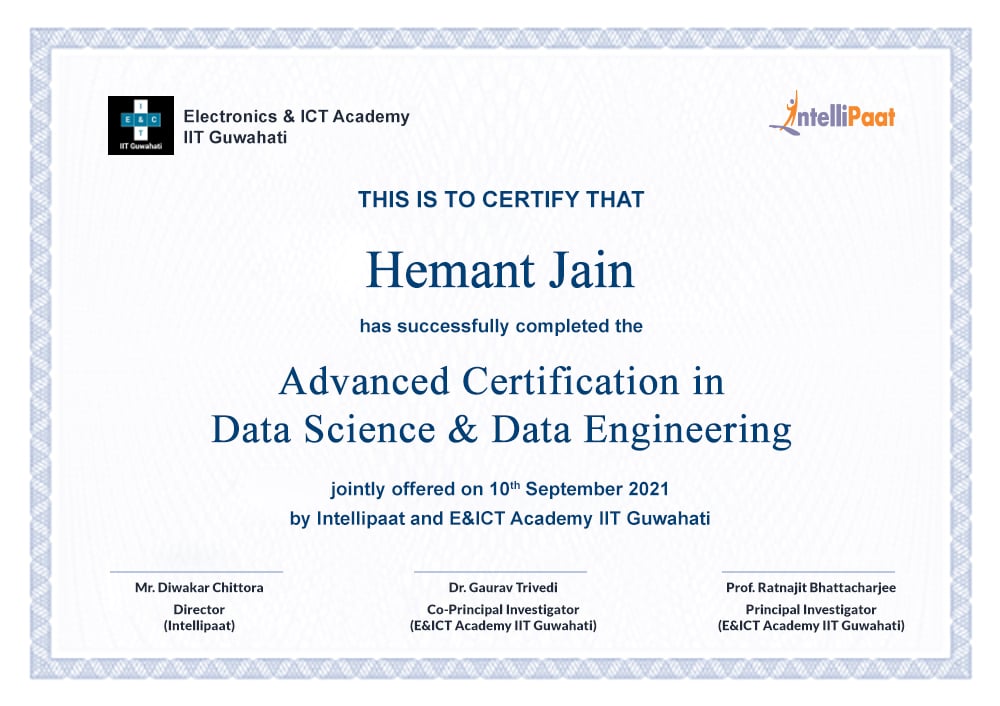

After completing the course and successfully executing the assignments and projects, you will gain an Advanced Certification in Data Science and Data Engineering from Intellipaat and E&ICT, IIT Guwahati, which will be recognized by top organizations around the world. Also, our job assistance team will prepare you for your job interviews by conducting several mock interviews, preparing your resume, and more.

Intellipaat provides career services that include guaranteed interviews for all learners who successfully complete this course. E&ICT, IIT Guwahati is not responsible for the career services.

Completing this program requires 9 months of attending live classes and completing the assignments and projects along the way.

If you miss a live class for any reason, you will be given a recording of that class within the next 12 hours. Also, if you need any support, you will have access to 24/7 technical support for query resolution and help.

To complete this program, you will have to set aside around 6 hours a week to learn. Classes will be held over weekends (Sat/Sun), and each session will be 3 hours long.

Upon completion of all of the requirements of the program, you will be awarded a certificate from E&ICT, IIT Guwahati.

What is included in this course?
